Estimating Statistical Power When Using Multiple Testing Procedures

This post is one in a series highlighting MDRC’s methodological work. Contributors discuss the refinement and practical use of research methods being employed across our organization.

Researchers are often interested in testing the effectiveness of an intervention on multiple outcomes, for multiple subgroups, at multiple points in time, or across multiple treatment groups. The resulting multiplicity of statistical hypothesis tests can increase the likelihood of spurious findings: that is, finding statistically significant effects that do not in fact exist. Multiple testing procedures (MTPs) are statistical procedures that counteract this problem by adjusting p-values for effect estimates upward. Without the use of an MTP, the probability of false positive findings increases, sometimes dramatically, with the number of tests.
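Here is a rough illustration of how quickly false positives accumulate. It assumes m independent tests, every null hypothesis true, and each test run at α = 0.05 with no adjustment. Under those assumptions, the family-wise error rate is 1 − (1 − α)^m. The function name `unadjusted_fwer` is hypothetical.

```python
# Probability of at least one false positive (the family-wise error rate)
# across m independent tests, each run at level alpha with no adjustment.
# Assumes every null hypothesis is true and the tests are independent.

def unadjusted_fwer(m: int, alpha: float = 0.05) -> float:
    """Chance that at least one of m independent true-null tests is rejected."""
    return 1 - (1 - alpha) ** m


for m in (1, 3, 5, 10, 20):
    print(f"{m:>2} tests: P(at least one false positive) = {unadjusted_fwer(m):.3f}")
```

Under these assumptions, 10 unadjusted tests carry about a 40 percent chance of at least one false positive, and 20 tests carry about a 64 percent chance. For two-sided tests on normally distributed statistics, correlation between the tests does not push the rate above this independence figure.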

Yet the use of an MTP can result in a substantial change in statistical power, greatly reducing the probability of detecting effects when they do exist. Thus, there is a trade-off between a lower probability of detecting a true effect when adjusting and a higher probability of a false positive when not adjusting. Unfortunately, when designing studies, researchers using MTPs frequently ignore their power implications. In some cases sample sizes may be too small and studies may be underpowered to detect the desired size of an effect. In other cases, sample sizes may be larger than needed and studies may be powered to detect smaller effects than anticipated.

But the use of an MTP need not always mean a loss of power. What is lost is individual power: the probability of detecting an effect of a particular size or larger for each hypothesis test. However, in studies with multiplicity, alternative definitions of power exist and in some cases may be more appropriate.[1] For example, when testing for effects on multiple outcomes, one might consider 1-minimal power: the probability of detecting effects of at least a particular size (which can vary by outcome) on at least one outcome. Or one might consider ½-minimal power: the probability of detecting effects of at least a particular size on at least half the outcomes. Finally, one might consider complete power: the probability of detecting effects of at least a particular size on all outcomes. How to define power depends on the objectives of the study and on how the success of the intervention is defined. The choice of definition also affects the study’s overall level of power.

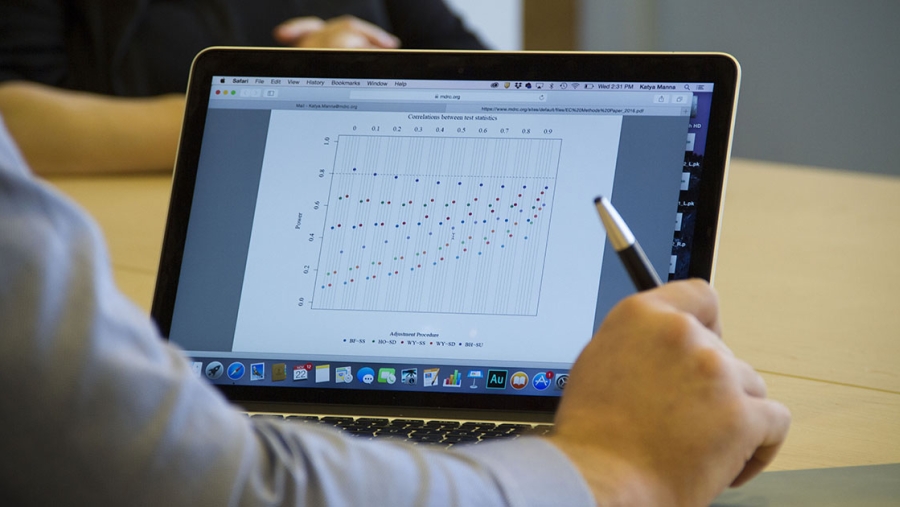
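A Monte Carlo sketch can make the four definitions of power concrete. It rests on several illustrative assumptions: the test statistics are normally distributed, every pair of outcomes shares a common correlation `rho`, each outcome has a known expected z-value (true effect divided by its standard error), and a Bonferroni adjustment is used. The function `simulate_power` and its parameters are hypothetical and are not part of MDRC's software.

```python
import numpy as np
from statistics import NormalDist


def simulate_power(effects, rho=0.3, alpha=0.05, n_sims=20_000, seed=1):
    """Monte Carlo estimates of several power definitions under Bonferroni.

    effects: expected z-statistic (true effect / standard error) per outcome.
    rho:     assumed common correlation between the outcomes' test statistics.
    """
    effects = np.asarray(effects, dtype=float)
    m = effects.size
    cov = np.full((m, m), rho)
    np.fill_diagonal(cov, 1.0)
    rng = np.random.default_rng(seed)
    z = rng.multivariate_normal(effects, cov, size=n_sims)

    # Two-sided Bonferroni test of each outcome at level alpha / m;
    # a rejection in either direction is counted as a detection.
    crit = NormalDist().inv_cdf(1 - alpha / (2 * m))
    reject = np.abs(z) > crit
    hits = reject.sum(axis=1)  # number of outcomes detected in each replicate

    return {
        "individual": reject.mean(axis=0),                       # per outcome
        "1-minimal": float(np.mean(hits >= 1)),                  # at least one
        "1/2-minimal": float(np.mean(hits >= np.ceil(m / 2))),   # at least half
        "complete": float(np.mean(hits == m)),                   # all outcomes
    }


power = simulate_power([2.5, 2.5, 2.5, 2.5, 2.5], rho=0.3)
for name, value in power.items():
    print(name, np.round(value, 3))
```

By construction, complete power can never exceed any outcome's individual power, and individual power can never exceed 1-minimal power. The ½-minimal power always falls between complete power and 1-minimal power.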

At MDRC, with two consecutive Statistical and Research Methodology in Education grants from the Institute of Education Sciences (IES) (R305D140024 and R305D170030), we have been developing methods for estimating statistical power under multiple definitions of power when applying any of five common MTPs: Bonferroni (Dunn 1959, 1961), Holm (1979), single-step and step-down versions of Westfall-Young (1993), and Benjamini-Hochberg (1995). Our methods are described in detail in an article and a working paper. Both focus on the multiplicity that arises in a simple and common research design: a multisite, randomized controlled trial in which individuals are randomized in blocks and effects are estimated using a model with block-specific intercepts and the assumption of constant effects across blocks.
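For reference, three of these MTPs work directly on p-values and can be written in a few lines. What follows is a plain-Python sketch of the standard adjusted-p-value forms of Bonferroni, Holm, and Benjamini-Hochberg. The function names are our own. Westfall-Young is left out because it requires resampling the data, not just the p-values. An effect is declared significant when its adjusted p-value is at or below the chosen α.

```python
def bonferroni(pvals):
    """Bonferroni: multiply each p-value by the number of tests (capped at 1)."""
    m = len(pvals)
    return [min(1.0, m * p) for p in pvals]


def holm(pvals):
    """Holm step-down: the k-th smallest p-value is multiplied by (m - k + 1),
    then a running maximum keeps smaller p-values from getting larger
    adjusted values than bigger ones."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # smallest first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):                   # rank 0 = smallest
        running_max = max(running_max, (m - rank) * pvals[i])
        adjusted[i] = min(1.0, running_max)
    return adjusted


def benjamini_hochberg(pvals):
    """Benjamini-Hochberg step-up: the k-th smallest p-value is multiplied by
    m / k, then a running minimum (taken from the largest p-value down) keeps
    the adjusted values monotone."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i], reverse=True)  # largest first
    adjusted = [0.0] * m
    running_min = 1.0
    for position, i in enumerate(order):
        k = m - position                               # 1-based ascending rank
        running_min = min(running_min, pvals[i] * m / k)
        adjusted[i] = running_min
    return adjusted


p = [0.01, 0.04, 0.03, 0.005]
print([round(x, 3) for x in bonferroni(p)])          # [0.04, 0.16, 0.12, 0.02]
print([round(x, 3) for x in holm(p)])                # [0.03, 0.06, 0.06, 0.02]
print([round(x, 3) for x in benjamini_hochberg(p)])  # [0.02, 0.04, 0.04, 0.02]
```

At α = 0.05, this example shows the procedures diverging. Bonferroni rejects two nulls, Holm rejects the same two, and Benjamini-Hochberg rejects all four.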

We used these methods to produce empirical findings about how various factors in studies with multiplicity affect overall statistical power. The results point to several recommendations for practice, outlined below. In addition, with IES support, MDRC is exploring other applications for these methods and recommendations. We are in the process of creating and publishing user-friendly, open-source software for applied researchers and developing an interactive web application to allow users to create plots of power, minimum detectable effect sizes, or sample size requirements for studies with multiplicity.

Recommendations for Practice

Prespecify all hypothesis tests and prespecify a plan for making multiplicity adjustments. Researchers who plan to use an MTP should account for its effects on statistical power when determining the study’s sample size.
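Here is a minimal sketch of how an MTP enters the sample-size calculation. The simplifying assumptions are ours, not the post's: a two-arm trial with equal allocation, an effect measured in standard-deviation units, a normal approximation, no precision gain from blocking or covariates, and a Bonferroni adjustment targeting individual power. The function `n_per_arm` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_arm(effect_size, alpha=0.05, power=0.80, n_tests=1):
    """Approximate per-arm sample size for Bonferroni-adjusted individual power.

    Assumes two equal arms, an effect in standard-deviation units, a normal
    approximation, and no precision gain from blocking or covariates.
    """
    z = NormalDist().inv_cdf
    crit = z(1 - alpha / (2 * n_tests))  # two-sided critical value at alpha / n_tests
    return math.ceil(2 * (crit + z(power)) ** 2 / effect_size ** 2)


print(n_per_arm(0.20))             # single test
print(n_per_arm(0.20, n_tests=5))  # Bonferroni adjustment for five tests
```

Take an effect of 0.20 standard deviations, 80 percent individual power, and α = 0.05. Under these assumptions, a single test needs 393 people per arm. A Bonferroni adjustment for five tests raises that to 584 per arm.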

Think about the definition of success for the intervention under study and choose a corresponding definition of statistical power. The prevailing default in many studies, individual power, may or may not be the most appropriate type of power. Suppose the researchers’ goal is to find statistically significant estimates of effects on all primary outcomes of interest. Then the relevant definition is complete power. Estimates of individual power, even after taking multiplicity adjustments into account, can grossly overstate complete power and so understate the sample size actually required. On the other hand, if the researchers’ goal is to find statistically significant estimates of effects on at least one outcome or a small proportion of outcomes, their power may be much better than anticipated. They may be able to get away with a smaller sample size, or they may be able to detect smaller effects.
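The gap between individual and complete power can be sketched in a simplified setting. The setting has two arms, a standardized effect that is identical on every outcome, and a Bonferroni adjustment. Crucially, it also assumes independent test statistics, so that complete power equals individual power raised to the number of outcomes. These are illustrative assumptions. Positively correlated outcomes would typically raise complete power above this independence figure. `n_per_arm_complete` is a hypothetical helper.

```python
import math
from statistics import NormalDist


def n_per_arm_complete(effect_size, n_tests, alpha=0.05, complete_power=0.80):
    """Approximate per-arm sample size for Bonferroni-adjusted complete power.

    Assumes independent test statistics and the same standardized effect on
    every outcome, so complete power = (individual power) ** n_tests.
    """
    z = NormalDist().inv_cdf
    per_test_power = complete_power ** (1 / n_tests)  # individual power needed
    crit = z(1 - alpha / (2 * n_tests))                # Bonferroni critical value
    return math.ceil(2 * (crit + z(per_test_power)) ** 2 / effect_size ** 2)


for m in (1, 3, 5):
    print(m, n_per_arm_complete(0.20, m))
```

Consider five outcomes and an effect of 0.20 standard deviations. Under these assumptions, 80 percent complete power requires each test to have about 96 percent individual power. The per-arm sample size then rises to a little over 900, well above what 80 percent individual power would require.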

In some cases, it may be best for researchers to estimate and share power estimates for multiple power definitions. For example, even if a program would be considered successful should an effect of a specified size be found for at least one outcome (requiring 1-minimal power), researchers may still want to know the probability of detecting effects on each particular outcome (requiring individual power). Or consider the case in which a sample size is fixed and the probability of detecting statistically significant effects on all outcomes (complete power) is unacceptably low. It still may be valuable for researchers to be able to achieve a high probability of detecting effects on at least half the outcomes (½-minimal power).
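When the sample size is fixed, several power definitions can be reported side by side. This sketch assumes independent test statistics, the same expected z-value (effect divided by its standard error) on every outcome, and a Bonferroni adjustment. Under those assumptions, the number of detected outcomes is binomial, so d-minimal power is a binomial tail probability. The function names are illustrative.

```python
from math import ceil, comb
from statistics import NormalDist


def individual_power(effect_z, n_tests, alpha=0.05):
    """Power of one two-sided Bonferroni-adjusted test (upper tail only)."""
    nd = NormalDist()
    crit = nd.inv_cdf(1 - alpha / (2 * n_tests))
    return 1 - nd.cdf(crit - effect_z)


def d_minimal_power(effect_z, n_tests, d, alpha=0.05):
    """P(at least d of n_tests outcomes are detected), assuming independence."""
    p = individual_power(effect_z, n_tests, alpha)
    return sum(comb(n_tests, k) * p**k * (1 - p) ** (n_tests - k)
               for k in range(d, n_tests + 1))


m = 5
print("individual: ", round(individual_power(2.5, m), 3))
print("1-minimal:  ", round(d_minimal_power(2.5, m, 1), 3))
print("1/2-minimal:", round(d_minimal_power(2.5, m, ceil(m / 2)), 3))
print("complete:   ", round(d_minimal_power(2.5, m, m), 3))
```

Take five outcomes, each with an expected z-value of 2.5. Under these assumptions, complete power is only about 0.02, while 1-minimal power is about 0.96. Reporting both avoids leaving readers with only one of these very different pictures.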

Do not necessarily choose the MTP that results in the most power. Four of the five MTPs discussed here (Bonferroni, Holm, and both versions of Westfall-Young) control the family-wise error rate, or the probability of at least one false positive. The Benjamini-Hochberg MTP, which generally results in the most power, controls the false discovery rate, which is the expected proportion of rejected null hypotheses that are wrongly rejected. An MTP that controls the false discovery rate is more lenient with false positives than one that controls the family-wise error rate. Researchers may tolerate a few false positives when testing for effects on a large number of outcomes. But when the number of outcomes is small, a single false positive is more likely to lead to the wrong conclusion about an intervention’s effectiveness. In that case, controlling the family-wise error rate with the Bonferroni, Holm, or Westfall-Young MTP is likely to be preferable. Among these, the Westfall-Young step-down procedure generally results in the most power. However, the simpler Holm MTP or the single-step Westfall-Young MTP may suffice if a low or moderate correlation between outcomes is expected, or if the study will use a 1-minimal definition of power.
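The Westfall-Young procedures gain power by building the correlation between outcomes into the adjustment. The code below is a rough sketch of the single-step (maxT) form. It re-randomizes treatment within blocks, recomputes the test statistic for every outcome, and compares each observed |t| with the distribution of the largest |t| across outcomes. The main assumption is that a simple pooled two-sample t statistic stands in for the block-intercept regression model described earlier. All function names and the simulated data are hypothetical. The step-down version is not shown.

```python
import numpy as np


def t_stats(y, treat):
    """Pooled-variance two-sample t statistic for each outcome (column of y)."""
    y1, y0 = y[treat == 1], y[treat == 0]
    n1, n0 = len(y1), len(y0)
    diff = y1.mean(axis=0) - y0.mean(axis=0)
    pooled_var = ((n1 - 1) * y1.var(axis=0, ddof=1)
                  + (n0 - 1) * y0.var(axis=0, ddof=1)) / (n1 + n0 - 2)
    return diff / np.sqrt(pooled_var * (1 / n1 + 1 / n0))


def permute_within_blocks(treat, blocks, rng):
    """Re-randomize treatment within each block, mirroring a blocked design."""
    permuted = treat.copy()
    for b in np.unique(blocks):
        idx = np.flatnonzero(blocks == b)
        permuted[idx] = rng.permutation(treat[idx])
    return permuted


def westfall_young_single_step(y, treat, blocks, n_perm=2000, seed=0):
    """Single-step (maxT) Westfall-Young adjusted p-values.

    Each outcome's adjusted p-value is the share of permutations in which the
    largest |t| across all outcomes is at least that outcome's observed |t|.
    """
    rng = np.random.default_rng(seed)
    observed = np.abs(t_stats(y, treat))
    exceed = np.zeros_like(observed)
    for _ in range(n_perm):
        perm_t = np.abs(t_stats(y, permute_within_blocks(treat, blocks, rng)))
        exceed += perm_t.max() >= observed
    return (exceed + 1) / (n_perm + 1)  # count the observed assignment too


# Hypothetical data: 10 blocks of 20 people, half treated in each block,
# three correlated outcomes, and a true effect on the first outcome only.
rng = np.random.default_rng(42)
blocks = np.repeat(np.arange(10), 20)
treat = np.tile(np.repeat([0, 1], 10), 10)
y = 0.5 * rng.normal(size=(200, 1)) + rng.normal(size=(200, 3))
y[:, 0] += 0.8 * treat
adjusted = westfall_young_single_step(y, treat, blocks)
print(np.round(adjusted, 4))
```

Because every outcome is compared with the same maximum, the adjustment is less severe when outcomes are highly correlated. In that case, the maximum of the permuted statistics behaves more like a single statistic than like the maximum of several independent ones.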

Consider the possibility that there may not be impacts on all outcomes. Researchers may be inclined to assume that there will be effects on all outcomes, as hypotheses of effects probably drive the selection of outcomes in the first place. And when estimating power for a single hypothesis test, power is defined only when a true effect exists. However, in stepwise MTPs (Holm, Benjamini-Hochberg, and the step-down version of Westfall-Young), the p-value adjustments are made in sequence, with each depending on the one before. If some outcomes have no true effects, the probability of detecting the effects that do exist can be diminished, sometimes substantially (see Porter 2017).
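A small simulation can show what null outcomes cost under a stepwise procedure. This sketch assumes independent, normally distributed test statistics. It compares the Benjamini-Hochberg power to detect a true effect on the first outcome in two cases: all five outcomes have that effect, or the other four have none. The expected z-value of 2.5, the helper names, and the simulation settings are illustrative assumptions.

```python
import math
import numpy as np


def bh_reject(pvals, alpha=0.05):
    """Benjamini-Hochberg step-up decisions for each row of a p-value matrix."""
    m = pvals.shape[1]
    order = np.argsort(pvals, axis=1)                        # ascending p per row
    sorted_p = np.take_along_axis(pvals, order, axis=1)
    below = sorted_p <= alpha * np.arange(1, m + 1) / m      # p_(k) <= alpha*k/m
    # k* = largest rank meeting the criterion (0 if none); reject the k* smallest.
    k_star = np.where(below.any(axis=1), m - np.argmax(below[:, ::-1], axis=1), 0)
    reject_sorted = np.arange(m) < k_star[:, None]
    reject = np.zeros_like(reject_sorted)
    np.put_along_axis(reject, order, reject_sorted, axis=1)  # back to outcome order
    return reject


def bh_power_first_outcome(effects, alpha=0.05, n_sims=20_000, seed=0):
    """Share of replicates in which BH rejects the null for the first outcome.

    effects: expected z-statistic per outcome; independence is assumed.
    """
    effects = np.asarray(effects, dtype=float)
    rng = np.random.default_rng(seed)
    z = rng.normal(loc=effects, size=(n_sims, effects.size))
    pvals = np.vectorize(math.erfc)(np.abs(z) / math.sqrt(2))  # two-sided p
    return float(bh_reject(pvals, alpha)[:, 0].mean())


power_all = bh_power_first_outcome([2.5, 2.5, 2.5, 2.5, 2.5])
power_one = bh_power_first_outcome([2.5, 0.0, 0.0, 0.0, 0.0])
print(f"effects on all five outcomes: {power_all:.3f}")
print(f"effect on first outcome only: {power_one:.3f}")
```

Under these assumptions, the first outcome's power is noticeably lower in the second case, even though its own effect is unchanged. With no other rejections to loosen the step-up thresholds, the first outcome usually has to clear the strictest one, α/m.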

Take all of the above into account in the design phase of a study when estimating power, sample size requirements, or minimum detectable effect sizes. Working through these recommendations is not a linear process; each affects the others. For example, using a 1-minimal definition of power will allow researchers to consider more outcomes without any power loss, whereas other definitions may mean that they want to be parsimonious in selecting their primary outcomes. As with all facets of study design, it is crucial to understand the trade-offs involved.
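The point about 1-minimal power can be checked under simple assumptions. The sketch uses a Bonferroni adjustment, independent test statistics, and the same expected z-value on every outcome, including each newly added one. As outcomes are added, each test's individual power falls and so does complete power, while 1-minimal power rises. The helper name is hypothetical, and the pattern depends on the added outcomes actually having effects.

```python
from statistics import NormalDist


def bonferroni_power_by_outcomes(effect_z, max_tests, alpha=0.05):
    """(m, individual, 1-minimal, complete) power for m = 1..max_tests outcomes.

    Assumes independent test statistics with the same expected z-value on
    every outcome and counts only upper-tail rejections.
    """
    nd = NormalDist()
    rows = []
    for m in range(1, max_tests + 1):
        crit = nd.inv_cdf(1 - alpha / (2 * m))  # Bonferroni critical value
        p = 1 - nd.cdf(crit - effect_z)          # individual power
        rows.append((m, p, 1 - (1 - p) ** m, p ** m))
    return rows


rows = bonferroni_power_by_outcomes(2.5, 6)
for m, ind, one_min, comp in rows:
    print(f"M={m}: individual={ind:.2f}  1-minimal={one_min:.2f}  complete={comp:.2f}")
```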